Running the TensorFlow examples in MicroPython

The MicroPython interface to TensorFlow

The MicroPython interface to TensorFlow is implemented as a module written in C. It contains several Python classes:

- interpreter: gives access to the tflite-micro runtime interpreter

- tensor: lets you define and fill the input tensor and interpret the output tensor

- audio_frontend: needed for the wake word example

Running inference on the ESP32

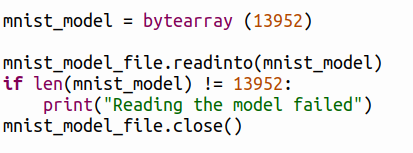

After defining and training the model with TensorFlow, you must convert it into a TensorFlow Lite model, an optimized FlatBuffer format identified by the .tflite extension. This file must then be transferred to the MicroPython file system. In my examples I create a "models" folder on the ESP32 in which I save the models. You can easily find the size of the model with the ls -l command on the PC. The model is read into a bytearray.
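A minimal sketch of the loading step, written so that it runs under both MicroPython and CPython: the file size is taken from os.stat() (index 6 of the result is the size in bytes), a bytearray of exactly that size is allocated once, and the file is read into it with readinto(). The function name read_model is hypothetical.

```python
import os


def read_model(path):
    """Read a .tflite model file into a bytearray (hypothetical helper)."""
    size = os.stat(path)[6]          # index 6 = file size in bytes
    model = bytearray(size)          # allocate the buffer once, at full size
    with open(path, "rb") as f:
        n = f.readinto(model)        # fill the buffer in place
    if n != size:
        raise OSError("short read: got %d of %d bytes" % (n, size))
    return model
```

On the ESP32 it would be called as model = read_model("models/hello_world.tflite"), where the file name is only an example. Allocating the buffer up front avoids building the model from many small chunks, which matters on a device with limited heap.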

Once the model is loaded we can create the runtime interpreter:

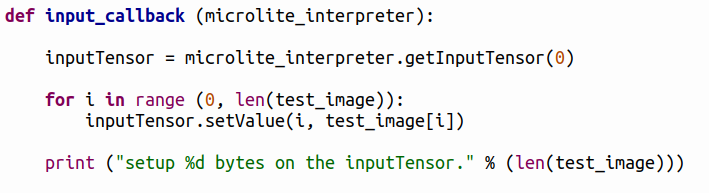

Finally, the output_callback is called, in which you access the output tensor and interpret its contents.


The classes and their resources

tensor

The tensor class has the following methods:

- getValue(index)

- setValue(index, value)

- getType()

- quantizeFloatToInt8()

- quantizeInt8ToFloat()
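The page does not spell out what quantizeFloatToInt8() and quantizeInt8ToFloat() compute. Assuming they follow the standard TensorFlow Lite int8 affine scheme, in which each quantized tensor carries a scale and a zero_point, the two conversions can be sketched in plain Python as below. The helper names and the scale/zero_point values are hypothetical and chosen only for illustration; on the board these parameters come from the model.

```python
def _round_half_away(v):
    # Round to nearest, ties away from zero (like C's round()).
    # Python's built-in round() would round ties to even instead.
    return int(v + 0.5) if v >= 0 else int(v - 0.5)


def quantize_float_to_int8(x, scale, zero_point):
    """Hypothetical helper: q = round(x / scale) + zero_point, clamped to int8."""
    q = _round_half_away(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize_int8_to_float(q, scale, zero_point):
    """Hypothetical helper: x = (q - zero_point) * scale."""
    return (q - zero_point) * scale


# Illustrative parameters only; a real tensor's scale and zero point come from the model.
SCALE, ZERO_POINT = 0.5, -10
q = quantize_float_to_int8(1.0, SCALE, ZERO_POINT)   # -8
x = dequantize_int8_to_float(q, SCALE, ZERO_POINT)   # 1.0
```

Values outside the representable range saturate: with these parameters, 100.0 maps to 127 and -100.0 maps to -128.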

The interpreter

The interpreter has methods to get the input and output tensors and to run inference:

- getInputTensor(tensor_number)

- getOutputTensor(tensor_number)

- invoke()
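The constructor of the interpreter class is not given in the text, so the sketch below uses hypothetical stand-ins (StubTensor, StubInterpreter) that only mimic the method names listed above. It illustrates the assumed call sequence: invoke() calls input_callback, which fills the input tensor, then runs the model, then calls output_callback, which reads the output tensor. A toy function (y = 2x) plays the role of the model; none of this is the module's real implementation.

```python
class StubTensor:
    """Hypothetical stand-in for the tensor class: a flat list of values."""

    def __init__(self, size):
        self._data = [0.0] * size

    def getValue(self, index):
        return self._data[index]

    def setValue(self, index, value):
        self._data[index] = value


class StubInterpreter:
    """Hypothetical stand-in for the interpreter class.

    Assumed behaviour: invoke() calls input_callback, runs the model,
    then calls output_callback, passing the interpreter to each callback.
    """

    def __init__(self, compute, input_callback, output_callback):
        self._compute = compute          # toy function standing in for the .tflite model
        self._input_cb = input_callback
        self._output_cb = output_callback
        self._inputs = [StubTensor(1)]
        self._outputs = [StubTensor(1)]

    def getInputTensor(self, tensor_number):
        return self._inputs[tensor_number]

    def getOutputTensor(self, tensor_number):
        return self._outputs[tensor_number]

    def invoke(self):
        self._input_cb(self)                              # 1. fill the input
        x = self._inputs[0].getValue(0)
        self._outputs[0].setValue(0, self._compute(x))    # 2. run the "model"
        self._output_cb(self)                             # 3. interpret the output


results = []


def input_callback(interp):
    interp.getInputTensor(0).setValue(0, 1.5)            # illustrative input value


def output_callback(interp):
    results.append(interp.getOutputTensor(0).getValue(0))


interp = StubInterpreter(lambda v: 2 * v, input_callback, output_callback)
interp.invoke()
print(results)  # [3.0]
```

Keeping the input and output handling in callbacks means the same invoke() loop can serve every example; only the two callbacks change from one model to the next.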

The examples

- hello world MicroPython

- person detection MicroPython

- wake word detection MicroPython

- magic wand MicroPython


| Attachment | Size | Date | Who |
|---|---|---|---|
| input_callback.png | 29.9 K | 2023-10-12 - 08:37 | UliRaich |
| interpreter.png | 7.2 K | 2023-10-12 - 08:27 | UliRaich |
| invoke.png | 3.0 K | 2023-10-12 - 08:39 | UliRaich |
| output_callback.png | 22.4 K | 2023-10-12 - 08:37 | UliRaich |
| readModel.png | 22.6 K | 2023-10-12 - 08:23 | UliRaich |
